Related Articles

Mechanical Design Q&A

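Mechanical Design Question and Answer 1: What are the main forms of metal structures? Answer: frame structures, vessel structures, box structures, and general component structures. 2: Into how many parts can riveting work be divided according to the nature of the process…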

Hydraulic and Pneumatics 2019, Volume 0, Issue 08

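液压与气动 (Hydraulics and Pneumatics) 2019, Volume 0, Issue 08. Founded in 1977, 液压与气动 is a technical academic journal supervised by the China Machinery Industry Federation and jointly sponsored by the Chinese Mechanical Engineering Society and the Beijing Research Institute of Automation for Machinery Industry. 液压与气动…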

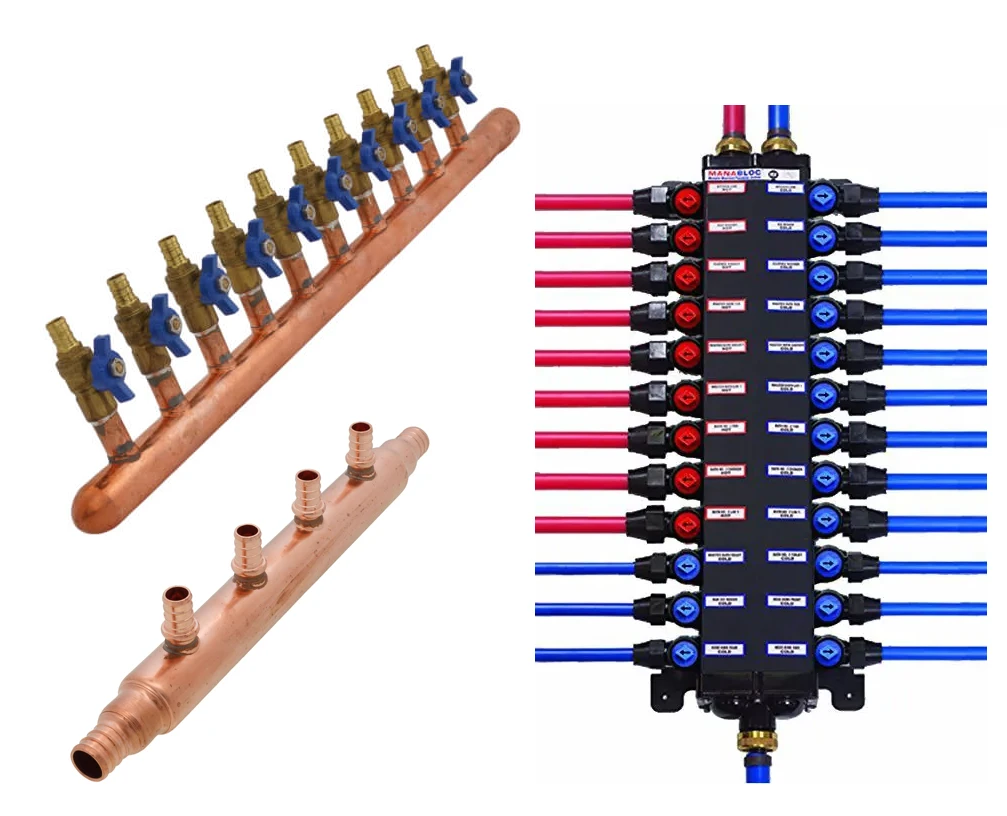

Plumbing - PEX Manifold and Install Guide

Different PEX manifolds serve different purposes. The home run manifold is meant to serve all or most…

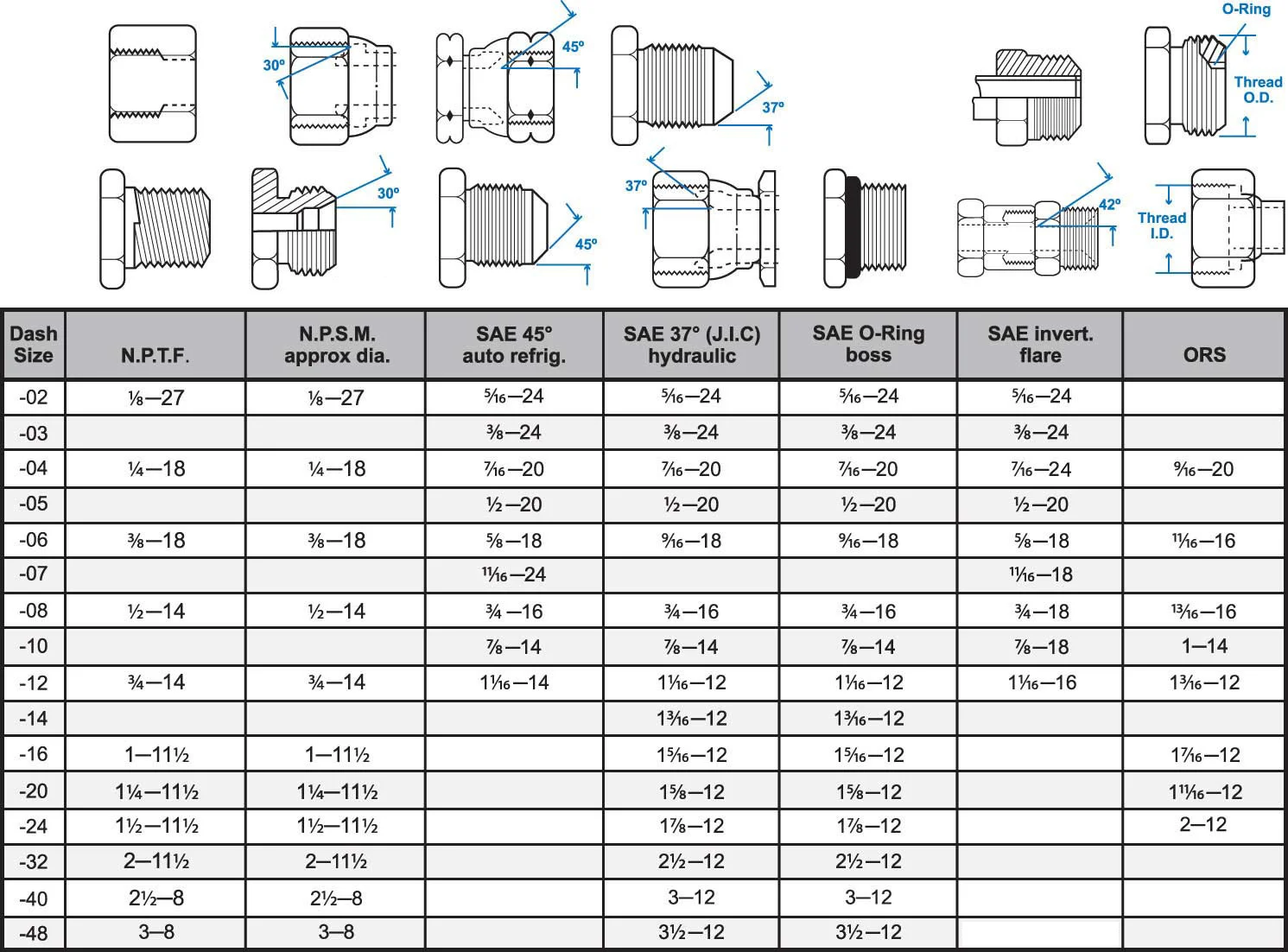

ISO Standard: Hoses and hose assemblies

ISO creates documents that provide requirements, specifications, guidelines or characteris…
